
What should you know about the New European Artificial Intelligence Regulation?

August 13, 2021

When the Commission published its White Paper on AI in 2020, human well-being was set as a priority at the European level. AI was already seen as a tool that should thrive in an ecosystem of excellence, trust and reliability.

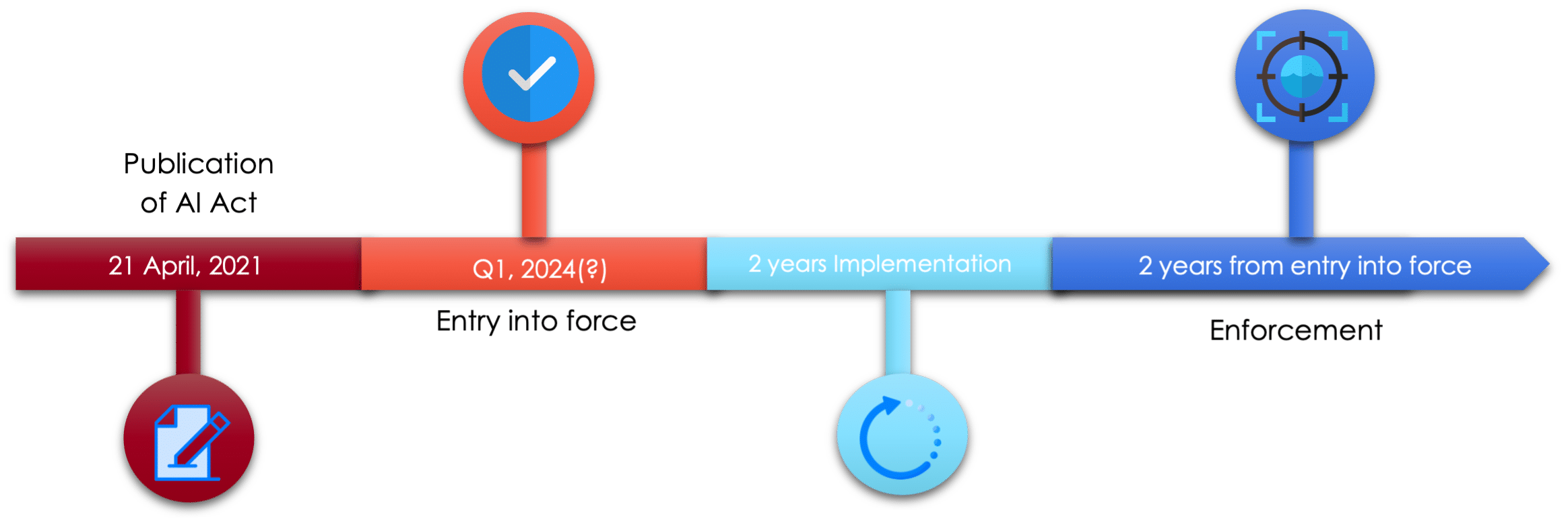

By publishing its proposal for a clear new regulation on AI on April 21, 2021, the European Commission intends to encourage companies to adopt AI governance and to stimulate the development of AI technologies in the EU market.

The 4 main points of the AI Act

- A single broad definition of AI

- Risk-based approach

- AI system classification

- Impact on the healthcare sector

The new Regulation adopts a single, broad definition of AI, which is intended to cover well-established automated decision-making systems.

The Regulation takes a risk-based approach: it identifies AI-related risks and provides additional safeguards, or a framework, alongside the requirements of the GDPR.

The Regulation prohibits certain AI systems because they present clear risks to the safety and rights of individuals; for example, systems that manipulate individuals or groups, or systems used for social scoring.

The AI Act classifies other AI systems as either high or low risk.

Based on a risk assessment methodology, the Commission will review and continuously update the list of high-risk AI systems used in specific pre-defined sectors.

- European authorities have spoken with one voice in calling for a virtual ban on the use of AI to infer a person’s emotions, which “is highly undesirable and should be banned except in very specific cases, such as certain health purposes”.

- The regulation classifies AI systems as “high risk” when they pose a significant risk to the health and safety or fundamental rights of individuals, considering both the severity of the potential harm and the probability that it occurs. To be placed on the Union market and to be considered trustworthy, these AI systems must meet a series of strict mandatory requirements.

- These matters necessarily involve a large number of professionals: people with knowledge of current legal standards and requirements, people with in-depth expertise in AI technologies, data and IT, and experts in fundamental rights and in health and safety risks.

- The AI Act includes a section devoted to measures in support of innovation. Article 54 (ii) specifically addresses the further processing of personal data for developing certain AI systems in the public interest within the AI regulatory sandbox, including in the area of:

(ii) “… public security and public health, including the prevention, control, and treatment of disease.”

FDA faces big challenges in the face of Covid-19

During the Covid-19 pandemic, the healthcare sector was able to benefit from AI devices in the fight against the virus, particularly to detect the patients most at risk of developing Covid-19-related complications. As a result, many hospitals are now building their own algorithms with patient data. With this in mind, the FDA has deemed it useful to regulate AI tools in healthcare. The main question is how information about the use of these tools will be communicated to patients and doctors, and what that information will be.

CONCLUSION

The recent proposal for an EU AI Regulation is the first of its kind in the world. It introduces new AI governance provisions to guarantee the fundamental rights of EU citizens while fostering innovation. Furthermore, to ensure that it is applied, it relies on a substantial penalty system (up to EUR 30 million or 6% of worldwide annual turnover).
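To give a sense of scale, the penalty ceiling mentioned above can be worked through as simple arithmetic. The following is only an illustrative sketch, not legal advice: it assumes the ceiling is the higher of the fixed amount and the turnover-based amount, and the function name `max_penalty_eur` and the sample turnover figures are hypothetical.

```python
from decimal import Decimal

# Figures taken from the article: "up to 30 million or 6% of worldwide annual turnover".
FIXED_CAP_EUR = Decimal("30000000")
TURNOVER_RATE = Decimal("0.06")


def max_penalty_eur(worldwide_annual_turnover_eur: Decimal) -> Decimal:
    """Return the upper bound of the fine for a given turnover.

    ASSUMPTION: the ceiling is whichever of the two amounts is higher.
    This is an illustration, not an authoritative reading of the text.
    """
    turnover_cap = worldwide_annual_turnover_eur * TURNOVER_RATE
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # Hypothetical turnover figures, chosen only to show both branches.
    for turnover in (Decimal("100000000"), Decimal("2000000000")):
        ceiling = max_penalty_eur(turnover)
        print(f"turnover EUR {turnover:,} -> ceiling EUR {ceiling:,.0f}")
```

Under this assumption, the fixed amount sets the ceiling for organisations with a worldwide annual turnover below EUR 500 million (since 6% of EUR 500 million is EUR 30 million), and the turnover-based amount sets it above that level.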

However, the EDPB-EDPS joint opinion raises some additional issues to address. For instance, it appears that AI systems related to biometric data, and systems that present low risks but are nevertheless biased, are still not covered by the regulation. Hence, some work remains to be done for the regulation to complement the GDPR effectively.

This regulation is likely to affect many actors who provide, use or otherwise handle AI systems within the EU (and also outside the EU, for any AI system affecting individuals in the EU). It is not expected to apply until the end of the ratification process, which can last between 2 and 5 years.

If you are an organisation operating in the health sector and are looking for advice or additional information on this subject, contact MyData-TRUST. We will be pleased to assist you.

Manon Darms